Content generation

Overview

When you connect your AI Agent to your knowledge base and start to serve automatically generated content to your customers, it might feel like magic. But it’s not! This topic takes you through what happens behind the scenes when you start serving knowledge base content to customers.

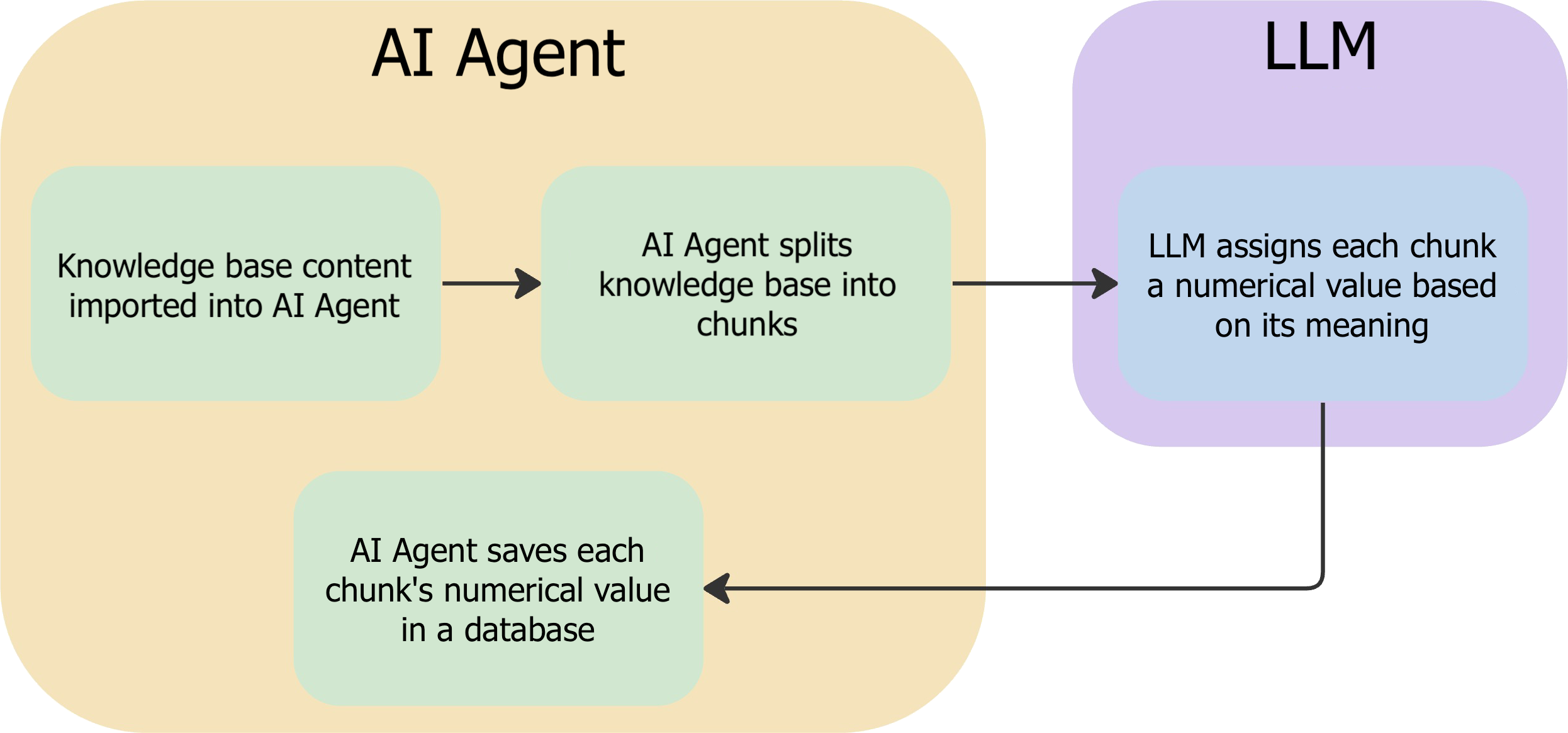

Knowledge base ingestion

When you link your knowledge base to your AI Agent, your AI Agent copies down all of your knowledge base content, so it can quickly search through it and serve relevant information from it. Here’s how it happens:

- When you link your AI Agent with your knowledge base, your AI Agent imports all of your knowledge base content.

  Depending on the tools you use to create and host your knowledge base, your AI Agent then checks for updates at different frequencies:

  - If your knowledge base is in Zendesk or Salesforce, your AI Agent checks back for updates every 15 minutes.

    - If your AI Agent hasn’t had any conversations, either immediately after you linked it with your knowledge base or in the last 30 days, your AI Agent pauses syncing. To trigger a sync with your knowledge base, have a test conversation with your AI Agent.

  - If your knowledge base is hosted elsewhere, you or your Ada team have to build an integration to scrape it and upload content to Ada’s Knowledge API. If this is the case, the frequency of updates depends on the integration.

- Your AI Agent splits your articles into chunks, so it doesn’t have to search through long articles each time it looks for information; it can just look at the shorter chunks instead.

  While each article can cover a variety of related concepts, each chunk should only cover one key concept. Additionally, your AI Agent includes context for each chunk: each chunk contains the headings that preceded it.

- Your AI Agent sends each chunk to a Large Language Model (LLM), which assigns each chunk a numerical representation that corresponds to its meaning. These numerical representations are called embeddings, and your AI Agent saves them into a database.

The database is then ready to provide information for GPT to put together into natural-sounding responses to customer questions.
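To make the chunking step more concrete, here is a minimal Python sketch of splitting an article into single-paragraph chunks that each carry the headings that preceded them. It is an illustration only, not Ada's actual implementation: the `Chunk` class, the `chunk_article` function, and the choice to treat lines starting with `#` as headings are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Chunk:
    headings: List[str]  # heading trail that preceded this chunk
    text: str            # body text of the chunk

    def with_context(self) -> str:
        """Return the chunk text prefixed by its heading trail."""
        trail = " > ".join(self.headings)
        return f"{trail}\n{self.text}" if trail else self.text


def chunk_article(article: str) -> List[Chunk]:
    """Split an article into one chunk per paragraph, tracking headings.

    Assumption: headings are Markdown-style lines that start with '#'.
    """
    chunks: List[Chunk] = []
    trail: List[Tuple[int, str]] = []  # (level, title) of the open headings
    paragraph: List[str] = []

    def flush() -> None:
        # Close the current paragraph, if any, and store it as a chunk.
        if paragraph:
            chunks.append(Chunk([title for _, title in trail], " ".join(paragraph)))
            paragraph.clear()

    for line in article.splitlines():
        stripped = line.strip()
        if stripped.startswith("#"):
            flush()
            level = len(stripped) - len(stripped.lstrip("#"))
            title = stripped.lstrip("#").strip()
            # A new heading replaces any open heading at the same or deeper level.
            while trail and trail[-1][0] >= level:
                trail.pop()
            trail.append((level, title))
        elif stripped:
            paragraph.append(stripped)
        else:
            flush()  # a blank line ends the current paragraph
    flush()
    return chunks


ARTICLE = """# Shipping
## Delivery times
Standard delivery takes 3 to 5 business days.

Express delivery takes 1 business day.
## Returns
You can return items within 30 days.
"""

for chunk in chunk_article(ARTICLE):
    print(chunk.with_context())
    print("---")
```

In this sketch, the text passed to the embedding step would be the output of `with_context()`, so a short chunk like "Express delivery takes 1 business day." still carries the "Shipping > Delivery times" context it needs to be matched correctly.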

Response generation

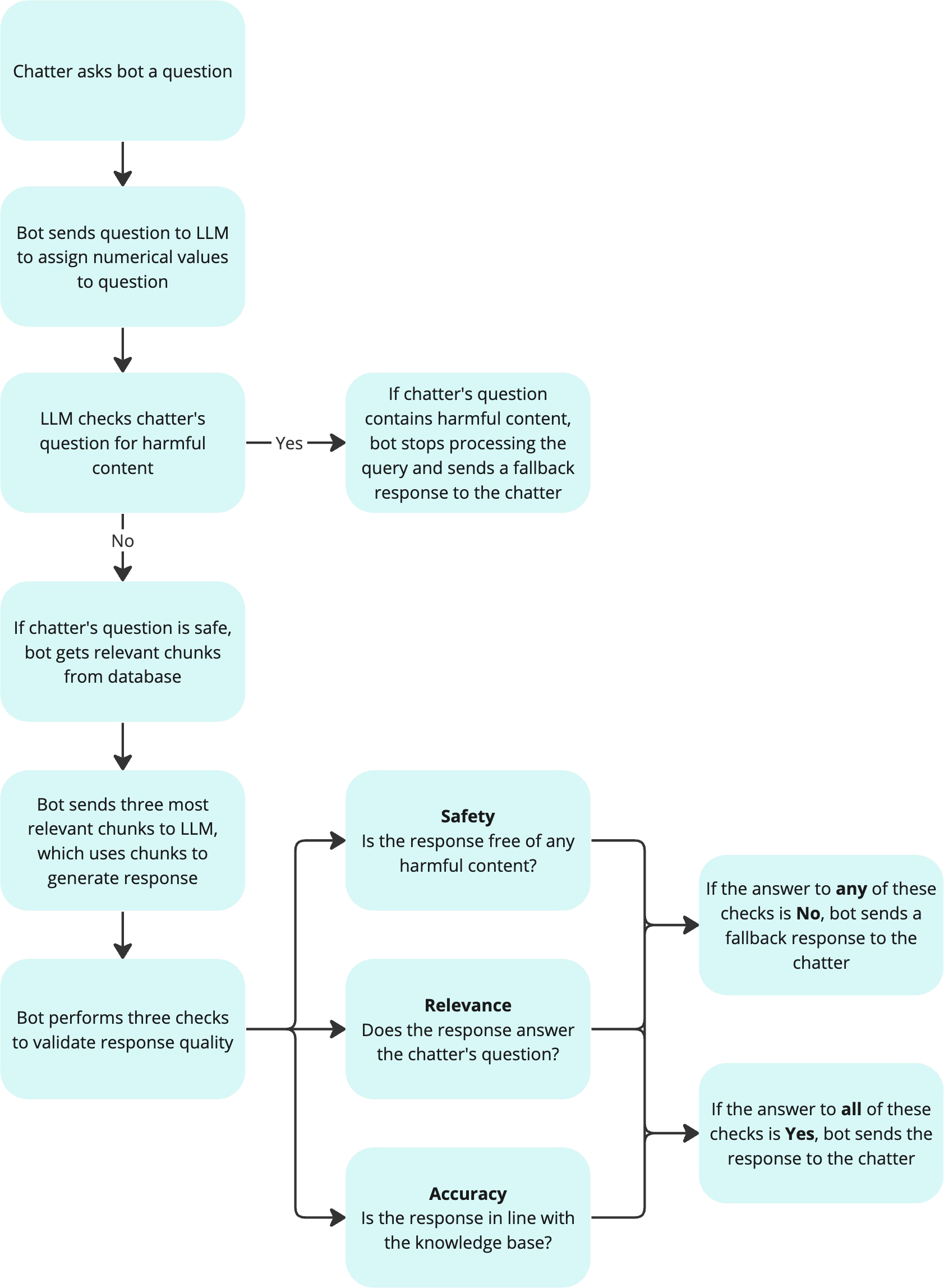

After saving your knowledge base content into a database, your AI Agent is ready to provide content from it to answer your customers’ questions. Here’s how it does that:

- Your AI Agent sends the customer’s query to the LLM, so it can get an embedding (a numerical representation) that corresponds to the information the customer was asking for.

  Before proceeding, your AI Agent sends the customer’s question through a moderation check via the LLM to see whether it was inappropriate or toxic. If it was, your AI Agent rejects the query and doesn’t continue with the answer generation process.

- Your AI Agent then compares embeddings between the customer’s question and the chunks in its database, to see if it can find relevant chunks that match the meaning of the customer’s question. This process is called retrieval.

  Your AI Agent looks in the database for the chunks whose meaning best matches what the customer asked for, a measure called semantic similarity, and saves the top three most relevant chunks.

  If the customer’s question is a follow-up to a previous question, your AI Agent might get the LLM to rewrite the customer’s question to include context to increase the chances of getting relevant chunks. For example, if a customer asks your AI Agent whether your store sells cookies, and your AI Agent says yes, your customer may respond with “how much are they?” That question doesn’t have enough information on its own, but a question like “how much are your cookies?” provides enough context to get a meaningful chunk of information back.

  If your AI Agent isn’t able to find any relevant matches to the customer’s question in the database’s chunks at this point, it serves the customer a message asking them to rephrase their question or escalates the query to a human agent, rather than attempting to generate a response and risking serving inaccurate information.

- Your AI Agent sends the three chunks that are most relevant to the customer’s question to GPT, which stitches them together into a response. Then, your AI Agent sends the generated response through three filters:

  - The Safety filter checks to make sure that the generated response doesn’t contain any harmful content.

  - The Relevance filter checks to make sure that the generated response actually answers the customer’s question. Even if the information in the response is correct, it has to be the information the customer was looking for in order to give the customer a positive experience.

  - The Accuracy filter checks to make sure that the generated response matches the content in your knowledge base, so it can verify that the AI Agent’s response is true.

- If the generated response passes these three filters, your AI Agent serves it to the customer.
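The retrieval and filtering steps above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Ada's implementation: the three-number vectors are hand-written stand-ins for real embeddings, cosine similarity is one common way to measure semantic similarity (the text doesn't say which measure is used), `min_score` is a hypothetical cutoff for deciding whether a chunk counts as relevant, and `stitch`, `passes`, and `fails` are trivial placeholders for the LLM call and the Safety, Relevance, and Accuracy filters. The text also doesn't say what happens when a response fails a filter; this sketch assumes the fallback message is served.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]
Store = List[Tuple[str, Vector]]  # (chunk text, chunk embedding) pairs

FALLBACK = "Sorry, I couldn't find an answer to that. Could you rephrase your question?"


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query: Vector, store: Store, top_k: int = 3,
             min_score: float = 0.5) -> List[str]:
    """Return up to top_k chunk texts most similar to the query embedding.

    min_score is a hypothetical relevance cutoff for this sketch; chunks
    that score below it are treated as not relevant.
    """
    scored = [(cosine_similarity(query, emb), text) for text, emb in store]
    relevant = [item for item in scored if item[0] >= min_score]
    relevant.sort(key=lambda item: item[0], reverse=True)
    return [text for _, text in relevant[:top_k]]


def respond(query: Vector, store: Store,
            generate: Callable[[List[str]], str],
            filters: Sequence[Callable[[str, List[str]], bool]]) -> str:
    """Retrieve chunks, generate a response, and gate it behind the filters."""
    chunks = retrieve(query, store)
    if not chunks:
        return FALLBACK  # no relevant chunks, so don't attempt to generate
    response = generate(chunks)
    # Assumption: a response that fails any filter is replaced by the fallback.
    if all(check(response, chunks) for check in filters):
        return response
    return FALLBACK


def stitch(chunks: List[str]) -> str:
    """Placeholder for the LLM call that writes a response from the chunks."""
    return " ".join(chunks)


def passes(response: str, chunks: List[str]) -> bool:
    """Placeholder filter that always passes."""
    return True


def fails(response: str, chunks: List[str]) -> bool:
    """Placeholder filter that always fails."""
    return False


STORE: Store = [
    ("Cookies cost $2 each.", [0.9, 0.1, 0.0]),
    ("We sell chocolate chip cookies.", [0.8, 0.3, 0.1]),
    ("Our store opens at 9 am.", [0.0, 0.2, 0.9]),
    ("Cookie orders ship in two days.", [0.7, 0.6, 0.1]),
    ("Gift cards never expire.", [0.1, 0.9, 0.3]),
]
QUERY = [1.0, 0.2, 0.0]       # stand-in embedding for "how much are your cookies?"
OFF_TOPIC = [-1.0, -1.0, -1.0]  # stand-in embedding that matches nothing in STORE

print(respond(QUERY, STORE, stitch, [passes, passes, passes]))
print(respond(OFF_TOPIC, STORE, stitch, [passes, passes, passes]))
print(respond(QUERY, STORE, stitch, [passes, passes, fails]))
```

With these toy vectors, the first call returns a response built from the three cookie-related chunks, while the second and third calls return the fallback message: one because no chunk is relevant enough, the other because a filter rejected the generated response.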