Machine learning Q&A

We hosted an Ask Me Anything session with Ada’s Machine Learning (ML) team. Below is a summary of some of the questions our team answered.

Q: How many Training Questions should my Answers have?

We have found that, in general, you should aim for between 15 and 20 training questions per Answer, and each training question should contain multiple words (avoid single-word training questions). You should also aim for balanced training question sets, because Answers with drastically more training than others can result in more inaccuracies.
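As a rough illustration of the guideline above, the following sketch flags Answers whose training falls outside the 15 to 20 range or contains single-word training questions. The function name `audit_training_balance`, the dictionary layout, and the example data are hypothetical; this is not an Ada API, only a way to reason about balance on exported training data.

```python
def audit_training_balance(training, low=15, high=20):
    """Flag Answers whose training looks unbalanced.

    `training` maps an Answer name to its list of training questions.
    The 15-20 target comes from the guideline above; the data layout
    is an assumption made for this sketch.
    """
    report = {}
    for answer, questions in training.items():
        issues = []
        count = len(questions)
        if count < low:
            issues.append(f"{count} training questions, below target of {low}")
        elif count > high:
            issues.append(f"{count} training questions, above target of {high}")
        # Single-word training questions are discouraged.
        single_word = [q for q in questions if len(q.split()) == 1]
        if single_word:
            issues.append(f"single-word training questions: {single_word}")
        if issues:
            report[answer] = issues
    return report


# Hypothetical example data.
example = {
    "Reset password": [f"how do I reset my password variant {i}" for i in range(16)],
    "Billing": ["invoice", "where is my invoice"],
}
for answer, issues in audit_training_balance(example).items():
    print(answer, "->", issues)
```

Here only "Billing" is reported: it has too few training questions and one of them is a single word.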

Q: How should I train queries that often include product names (e.g. “do you have apples?” or “can I buy oranges from you?”)? How does the ML handle technical/business jargon?

Our ML uses more than one model for answer prediction. One of them is more sensitive to where keywords appear in training, which helps when a specific name or term in the query is important for the Answer. Another is more sensitive to semantic meaning and will do some level of generalization to other words that are semantically similar. Unfortunately, there is currently no way to indicate whether a term should be treated as specific or general, but we are aiming to build out this functionality in the future!

Q: How are typos accounted for?

There are two ways in which typos are accounted for. First, our general ML models (which enable things like synonym detection and contextual word meaning) are trained on text containing hundreds of millions of words. That text is conversational and therefore contains many typos, so our general models are able to learn the meaning of typos that they have seen before in their training. Second, we have other models that pay attention to the sub-word information contained in training. Specifically, these models look at character-level trigrams (sequences of three characters) within training. So if an Answer is trained on multiple occurrences of “impossible”, the model recognizes that “impossilbe” and “imposible” both contain similar character sequences to “impossible.”
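To make the character trigram idea concrete, here is a minimal sketch that splits words into trigrams and measures how many they share. The boundary markers (`<` and `>`) and the Jaccard overlap score are illustrative assumptions, not a description of how Ada’s models actually compare words.

```python
def char_trigrams(word):
    """Return the set of character trigrams in a word.

    The word is lowercased and wrapped in '<' and '>' so that prefixes
    and suffixes get their own trigrams (an assumption for this sketch).
    """
    padded = f"<{word.lower()}>"
    return {padded[i:i + 3] for i in range(len(padded) - 2)}


def trigram_overlap(a, b):
    """Jaccard similarity between the trigram sets of two words."""
    ta, tb = char_trigrams(a), char_trigrams(b)
    return len(ta & tb) / len(ta | tb)


for candidate in ["impossilbe", "imposible", "banana"]:
    score = trigram_overlap("impossible", candidate)
    print(f"impossible vs {candidate}: {score:.2f}")
```

Both misspellings share most of their trigrams with “impossible”, while an unrelated word such as “banana” shares none.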

Q: Are training changes immediately reflected in the chatter experience or is there a lag time?

There is latency in model training after adding/modifying training data that is proportional to the amount of total training data: more Training Questions will require more time to train the bot on them. In general, it is probably good to wait at least 15 minutes after adding/modifying training data before testing it.

Q: At what clarification rate percentage should I be concerned about possibly having a lot of overlapping training questions?

There’s definitely a balance to be struck with clarification rate. On the one hand, there is a baseline of chatter messages that should always receive clarifications - think of a scenario where a user says “I want to reset my password and I also want to upgrade my plan.” In cases like these, your answer training may not overlap, but the user’s question is certainly relevant to both Answers. This is what you might refer to as a “healthy” clarification. On the other hand, if your bot’s training contains too many overlaps, your chatters will see a significant number of unwanted clarifications. For these cases, our Training Optimizer feature should help. It works by identifying Answers that frequently co-occur in clarifications and highlighting the specific Training Questions that overlap. Try it out and let us know what you think!

Q: Can we train the bot with non-English Questions?

Yes! Thanks to our Multilingual Training feature, you can now add training in any language that your bot supports.

Q: What happens if I have both auto-translate enabled and manually entered content?

The manually entered bot content will always take priority. If it exists for an Answer, the auto-translated content will never be sent.

Q: How does our ML compare to other NLP products on the market?

We think of our ML product much like a car: there is an advanced technological engine that is being driven by human operators. For the most part, because of trade secrets and non-disclosures, companies generally cannot look under the hood of other companies’ engines for direct comparison. To get top performance, Ada takes a holistic approach: optimizing a powerful engine with state-of-the-art ML/NLP while making it accessible and usable to the bot builders who drive it. That being said, historically we have performed rigorous benchmarking against other vendors in the space and found that we significantly outperformed them in the task of answer prediction. This is in large part due to the data and configurations we train our models on. More details here: https://www.ada.cx/platform/conversational-ai

Q: Should we include capitalizations? Avoid it? Do capitalizations have any particular meaning for the model?

None of our current models consider the casing of characters, so capitalization does not matter. However, we recommend using capitalization wherever it makes sense grammatically (for example, proper nouns), in case we decide to make use of it in the future. Currently, we always use the lowercase version of the Training Questions.

Q: Does punctuation need to be included or removed? If punctuation should be removed, are existing Training Questions that have punctuation detrimental to the model?

Some of our models are more sensitive to punctuation than others. In general, you can preserve punctuation as naturally as it appears in the customer messages you expect to see — if our models need to remove punctuation for any reason on the back end, we are able to automatically remove it.

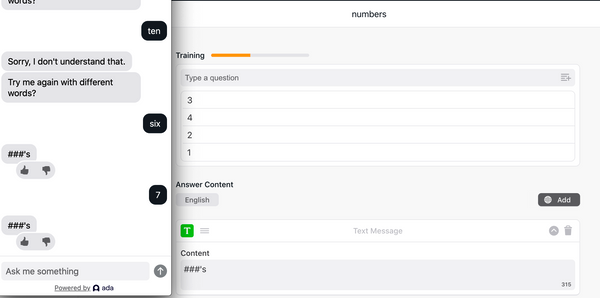

Q: How do numbers and special characters impact matching accuracy?

Our models are not optimized for training on numbers and special characters, but they do have some awareness of them and some ability to make predictions on them. Having only one Answer that is trained with numbers can negatively impact training, since users may use numbers frequently to refer to a variety of things. Include numbers in your training where they would naturally occur.

Q: If an answer has approximately 100 questions trained to it, and we see 30 or so Questions from chatters that were not understood, but which should have triggered that answer, what should we do/what should we be looking at to identify why they were not understood?

In the case where you’ve already trained a significant number of questions to an Answer but you see additional questions that should also be trained there, try your best to maintain balance between the number of Training Questions in that Answer and all others. If there are Training Questions that should be added, consider whether others can be removed.

It’s important to keep in mind that there is a constant precision vs. recall tradeoff when training Answers. On one hand, you want to be able to answer as many questions as possible; on the other hand, you want as many of the answer predictions you make as possible to be correct. Growing your training without bound can easily lead to issues where Answers become overtrained and gain a “black-hole” gravitational pull: over-matching user Questions that should be Not Understood or sent to other Answers. This happens because those “black-hole” Answers end up containing far more language variations than other Answers, leading the model to believe that they are relevant to more phrasings of user Questions. In most cases the answer to “should the 20 that match closer to the question not provide the bot with enough confidence to better match the response?” is yes. As long as your Answer has approximately 20 Questions, your model should be able to make use of that information to make subsequent predictions. We are currently in the process of updating our models to inherently protect against these cases by aggregating bot Training Questions by Answer behind the scenes. Training balance will remain important, but this should help in many cases.
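The tradeoff above can be put in numbers. The sketch below computes precision (of the times an Answer was predicted, how often it was right) and recall (of the Questions that belonged to an Answer, how many it caught) from a list of predicted and expected Answers. The function name and the sample data are hypothetical and only illustrate how a “black-hole” Answer shows up as high recall with low precision.

```python
def precision_recall(pairs, answer):
    """Compute precision and recall for one Answer.

    `pairs` is a list of (predicted_answer, expected_answer) tuples,
    for example from a manually reviewed sample of conversations.
    """
    tp = sum(1 for pred, true in pairs if pred == answer and true == answer)
    fp = sum(1 for pred, true in pairs if pred == answer and true != answer)
    fn = sum(1 for pred, true in pairs if pred != answer and true == answer)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical reviewed sample: "Billing" is overtrained and absorbs
# Questions that belong to "Shipping" or should be Not Understood.
reviewed = [
    ("Billing", "Billing"),
    ("Billing", "Billing"),
    ("Billing", "Shipping"),
    ("Billing", "Not Understood"),
    ("Shipping", "Shipping"),
]
precision, recall = precision_recall(reviewed, "Billing")
print(f"Billing precision={precision:.2f} recall={recall:.2f}")
```

In this sample “Billing” catches every Question it should (recall 1.0), but only half of its predictions are correct (precision 0.5), which is the over-matching pattern described above.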

Q: Our bot is now the front line for our company, and leadership wants to add more intents. As we add more intents, our leadership has noticed more Not Understood questions that we would have expected the bot to answer. How should we manage adding new intents and balance training questions so that we keep a healthy recognition rate?

As a general best-practice of bot-maintenance, we should periodically do an audit of all Answers in the bot and delete old/unused Answers. This audit should also expose duplicate/overlapping answers that we could try to merge together. This should result in only the relevant content and training remaining in the bot.

Q: How does the clarification/suggestions feature work? If there is a close prediction percentage between two Answers, will it give the top 2 or 3 Answers or is there more logic behind this?

The clarifications feature is triggered when a bot is not confident enough that its top Answer prediction is the right one to send, but it thinks that at least one of its top Answer predictions is relevant. Low confidence can occur either because two top predictions both have a high probability, or because only one prediction has a high probability but it is not quite high enough.
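The decision described above can be sketched roughly as follows. The threshold values, the limit of three options, and the function name `decide` are all assumptions made for illustration; the actual thresholds and logic Ada uses are not stated here.

```python
def decide(predictions, send_threshold=0.7, relevance_threshold=0.3, max_options=3):
    """Choose between sending an Answer, clarifying, or Not Understood.

    `predictions` maps Answer names to predicted probabilities.
    All thresholds are hypothetical values chosen for this sketch.
    """
    ranked = sorted(predictions.items(), key=lambda item: item[1], reverse=True)
    if not ranked:
        return "not_understood", []
    top_answer, top_score = ranked[0]
    # Confident enough in the top prediction: send it directly.
    if top_score >= send_threshold:
        return "send", [top_answer]
    # Not confident, but some predictions look relevant: ask the chatter.
    relevant = [name for name, score in ranked if score >= relevance_threshold]
    if relevant:
        return "clarify", relevant[:max_options]
    return "not_understood", []


# Two close, high predictions lead to a clarification with both options.
print(decide({"Reset password": 0.45, "Upgrade plan": 0.42, "Billing": 0.13}))
# One fairly high prediction that is not quite high enough.
print(decide({"Reset password": 0.60, "Upgrade plan": 0.25, "Billing": 0.15}))
# A clearly confident prediction is sent directly.
print(decide({"Reset password": 0.90, "Upgrade plan": 0.10}))
```

Both low-confidence cases from the explanation map to the same `clarify` branch; they differ only in how many Answers clear the relevance threshold.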

Q: If a chatter asks my bot a question, does my bot only take into account its own training data, or is there a larger pool of training data that is taken into consideration before a response is sent to the chatter?

There are multiple models involved in answer prediction. One involves learning the semantic meaning of each word so the bot can interpret sentences; this model does benefit from aggregated training. Another model classifies the specific question to its appropriate Answer in a bot, based on the meaning of the query and the Training Questions. Because each bot has its own unique set of Answers and Training Questions, there is no reason for this model to look at or be trained on other bots’ training data.

Q: We want to create a new Answer Flow with the goal of catching issues such as “not working,” “not loading,” etc. What is the best way to train this Answer? Should we avoid specifying what is failing and just focus the training on indicating that something is failing (not working, not loading, spinner, etc.)?

Generally speaking, it’s good to include training data that is close to the actual utterances your customers might send. So for a catch-all Answer Flow like this, you can include the specific terms for each item that isn’t working/loading, and our models will be able to generalize the meaning of those terms to other, similar ones. For example, if you were to add “Xbox not working” and “PS4 broken,” then the model would be more likely to generalize to other consoles, as in “Nintendo Switch isn’t working.”