Run an A/B test

Overview

A/B testing is an Ada dashboard tool that lets you gauge your audience’s response to variants of a single Answer. It helps you measure the impact of changes to the bot experience on your most valuable CX KPIs.

This feature may not be included with your organization’s subscription package. For more information, see Ada’s Pricing page, or contact your Ada team.

A/B testing works in conjunction with the Events feature. You create an Event to track the business objective you’d like to achieve with a specific Answer. You then create up to four variants of that Answer’s content, including the original. Once you start the test, chatters who encounter the test Answer are randomly assigned to one of the Answer’s variants. A report records how many times chatters trigger your Event for each variant. Use this report to determine which variant is the most effective at achieving your business objective.

Configure A/B testing

Before you configure your A/B test, know what business objective you want to achieve.

For example:

- Increase the number of meetings booked.

- Reduce the number of subscription cancellations.

- Entice more chatters to submit an application.

- Improve the rate at which users reply to my bot.

Next, develop a question that you want the test to answer. For example:

- Which Answer content will result in more booked meetings?

- Which subscription change offer improves customer retention the most?

- Does a text-only Answer used in a Campaign result in more applications than an Answer with video?

- Which Campaign message results in more users replying to my bot?

Finally, create an Event to measure how often your business objective is achieved. Events do this by tracking specific actions that your customers perform on your website. For more information, see the help article Create and track chatter actions.

Now you’re ready to configure your A/B test. This happens right on the Ada dashboard. There are two places on the dashboard where you can create a test:

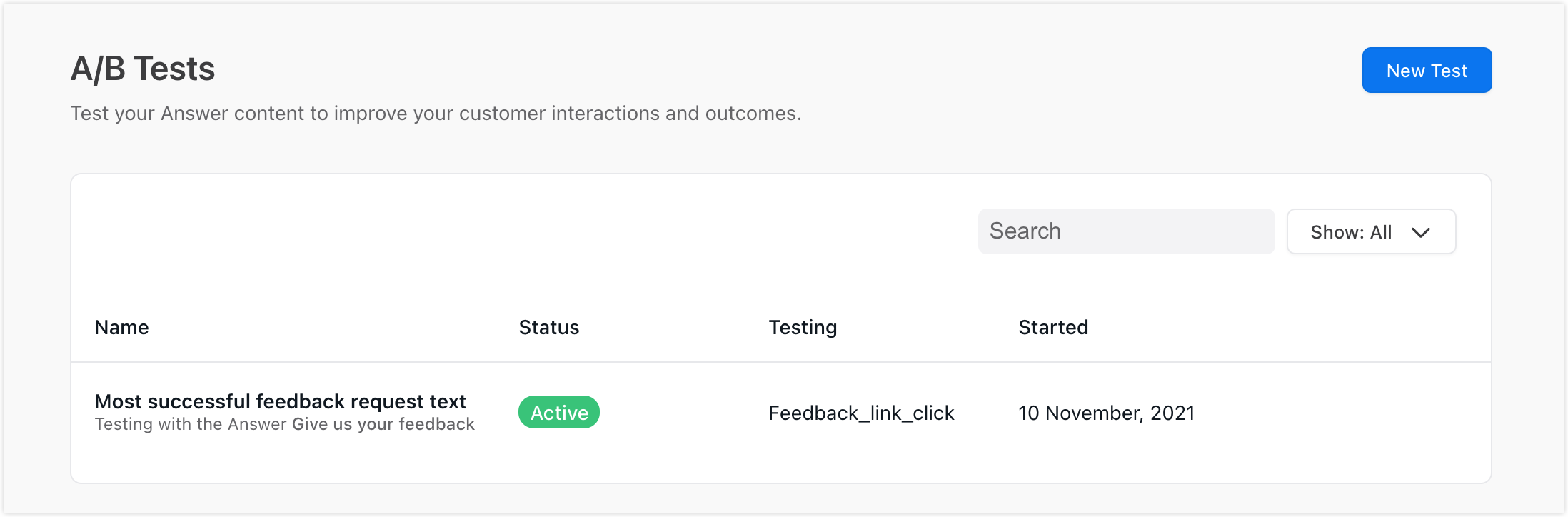

From the A/B Tests view

- On the Ada dashboard, go to Build > A/B Tests.

- Click the New Test button. The Create a new A/B Test dialog box displays.

- In the Create a new A/B Test dialog box, in the Test name field, enter a name for the test.

- Click the Answer to test drop-down menu and select an Answer to use in the test.

- Click the Metric to measure drop-down menu and select the Event to use for determining the winning variant. See Create and track chatter actions to learn how to create Events.

  Perform only one test per Event at a time. If you run more than one test per Event, your chatters could be exposed to both tests. If this happens, you’ll have no way of knowing which test caused a change in the Event being completed.

- Click Create.

Your new test is saved to the A/B Tests view as a card. Click the card to open the Answer it tracks. To view the test’s report, hover over the card to reveal the Reports button, then click it.

From the Answers view

- On the Ada dashboard, go to Build > Answers.

- Select the Answer to test.

- Click Options.

- Select Create A/B Test.

- In the Test name field, enter a test name.

- Click the Metric to measure drop-down menu, then select the Event to use for determining the winning variant. See the article Create and track chatter actions to learn how to create Events.

  Perform only one test per Event at a time. If you run more than one test per Event, your chatters could be exposed to both tests. If this happens, you’ll have no way of knowing which test caused a change in the Event.

- Click Create.

When you click Create, the Answer is set to Hidden while you configure the test. This means that your chatters are not able to access this Answer in your bot until the test is started, at which point the Answer is set to Live.

Create test variants

The key function of an A/B test is to compare the performance of one variant of an Answer against one or more alternative variants. Once you’ve configured an Answer for testing, the original content of that Answer is grouped into a tab called Control. Add new variant tabs to house the alternate Answer content you wish to test against the control content. An Answer can have up to four variants in total, including the control.

You must deactivate your Answer before you can add or remove test variant tabs. Click the toggle at the top of the Answer, beside the Answer title.

-

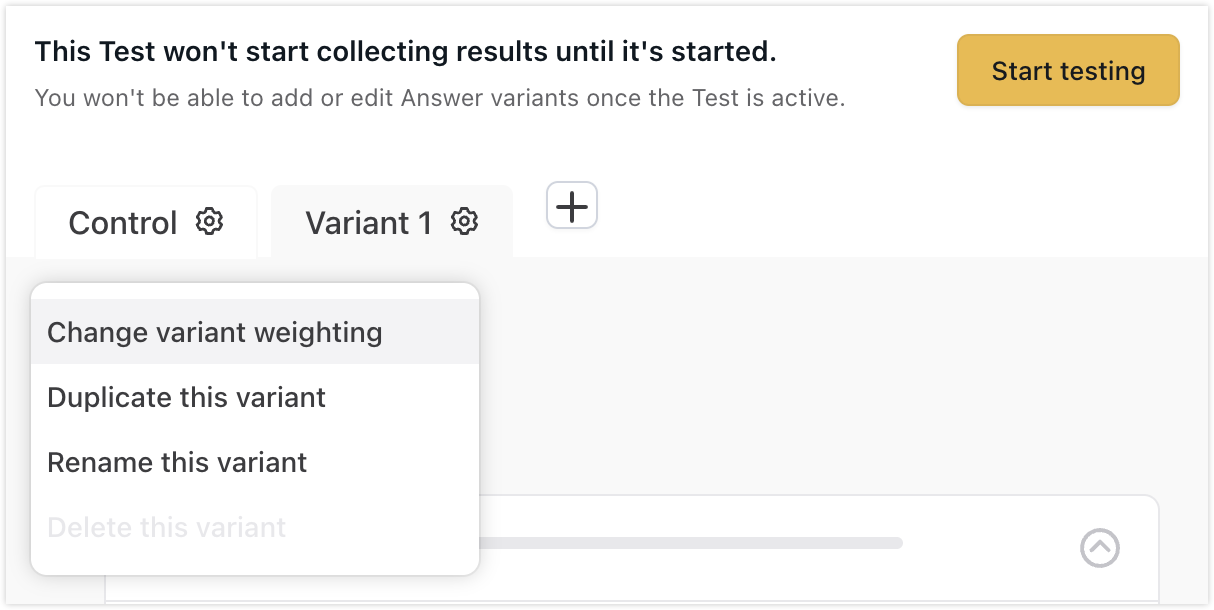

Create a new variant tab using one of the two following methods:

-

To create a blank variant tab, click the plus (+) sign to the right of the existing variant tab(s).

-

To duplicate an existing variant tab, click the gear icon in the tab you wish to duplicate, then select Duplicate this variant.

-

-

Set the variant weighting to control how often a variant is shown to your chatters. On either the control tab or any variant tab, click the gear icon, then click Change variant weighting. A dialog box opens containing sliders for each of your Answer variants. Use the sliders to set the percentage of chatters who will see each variant.

You can also change the variant weighting from your Answer’s Options menu.

-

[Optional] Rename the Answer variants. Click the gear icon in any variant tab, then click Rename this variant.

-

In the new variant tab, build the Answer variant you wish to test against the control Answer. You can create up to four variants, including the original control variant.

-

Click Save.

-

Ready to start your A/B test? At the top of the Answer view, click Start testing. You can also switch the Answer activation toggle at the top of the dashboard to Live.

-

At the top of your Answer, click Start testing to start your A/B test.

Starting a test will automatically make that Answer live and available to chatters.

Bear in mind that the more variants you add to an Answer, the longer it may take to collect enough data to decide which variant is the winner.
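To get a feel for why extra variants lengthen a test, the following rough sketch estimates test duration from traffic. It is not how Ada decides when a test is Conclusive. It assumes equal variant weighting, uses the standard sample-size approximation for comparing two proportions, and ignores any correction for comparing several variants at once. The function names, the traffic figure, and the conversion rates are all hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline, expected, alpha=0.05, power=0.8):
    """Approximate chatters needed per variant to detect baseline -> expected.

    Textbook two-proportion approximation; an assumption, not Ada's method.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (expected - baseline) ** 2)


def days_to_finish(num_variants, daily_chatters, baseline, expected):
    """Days until every variant has enough chatters, with traffic split evenly."""
    per_variant = sample_size_per_variant(baseline, expected)
    return math.ceil(per_variant * num_variants / daily_chatters)


# Hypothetical numbers: 500 chatters a day reach the test Answer, and you hope
# to lift the conversion rate from 10% to 12%.
for k in (2, 3, 4):
    print(k, "variants:", days_to_finish(k, 500, 0.10, 0.12), "days")
```

Because the same traffic is shared among all variants, the estimated duration grows roughly in proportion to the number of variants.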

Delete, stop, or edit an A/B test

There are several ways to make changes to an existing A/B test.

Delete an A/B test

From the A/B Tests view

- Go to Build > A/B Tests, then click the test card for the A/B test that you want to delete. This opens the Answer the test card tracks.

- Click Options.

- Click Delete this A/B Test.

- Click the Keep this variant drop-down menu, then select the variant to keep.

- Click Delete this A/B Test. The variant you selected to keep becomes the new permanent content for your Answer. All other variants are deleted, along with the test’s performance data.

From the Answers view

- Go to Build > Answers, then select the A/B test Answer.

- Click Options.

- Select Delete this A/B Test.

- Click the Keep this variant drop-down menu, then select the variant to keep.

- Click Delete this A/B Test. The variant you selected to keep becomes the new permanent content for your Answer. All other variants are deleted, along with the test’s performance data.

Stop or edit an A/B test

If you want to add, delete, or make changes to variants in an active A/B test, you have to stop the A/B test first.

Any performance data gathered before you stop the test is no longer meaningful once the test is stopped. Rather than editing a stopped test, we encourage you to delete the existing test and create a new A/B test with the Answer that you want to test, so that the results are meaningful.

From the A/B Tests view

- Click the A/B test card to select the test you want to stop. The associated test Answer opens.

- At the top of the dashboard, beside the Answer title, click the Answer activation toggle to switch it to Hidden. This stops the A/B test.

From the Answers view

- Select the test Answer from the Answer list.

- At the top of the dashboard, beside the Answer title, click the Answer activation toggle to switch it to Hidden. This stops the A/B test.

A/B testing and persistence settings

Your bot assigns variants to your chatters randomly, based on the likelihood you set with the variant weighting setting. If the chatter returns to the same Answer in the future, your bot shows them the same variant, unless their chatter persistence has reset.
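As an illustration of weighted random assignment, here is a minimal Python sketch. It is not Ada’s implementation. The `assign_variant` function, the variant names, and the percentages are hypothetical, chosen only to show how weightings translate into the share of chatters who see each variant.

```python
import random
from collections import Counter


def assign_variant(weights, rng):
    """Pick one variant name at random, in proportion to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Hypothetical weighting, expressed as the percentages set with the sliders.
weights = {"Control": 50, "Variant B": 30, "Variant C": 20}

rng = random.Random(42)  # seeded only so that the sketch is repeatable
counts = Counter(assign_variant(weights, rng) for _ in range(10_000))

for name in weights:
    print(name, round(counts[name] / 10_000, 3))
```

Over many chatters, the observed share of each variant approaches its weighting, although any individual assignment is random.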

Here is how persistence affects which variant the chatter sees if they return to the test Answer:

- Never Forget – The chatter will always see the same variant. Never Forget persistence only resets if the chatter clears their browser’s cache or cookies, or if they use a private browsing tab.

- Forget After Tab Close – If the chatter closes their browser window, the bot forgets the variant they saw and assigns them a new one randomly should they trigger your test Answer again.

- Forget After Reload – If the chatter reloads their browser window, the bot forgets the variant they saw and assigns them a new one randomly should they trigger your test Answer again.
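The interaction between persistence and variant assignment can be modelled with a small toy class. This is a sketch under stated assumptions, not Ada’s code. The `VariantMemory` class, the mode keys, and the event names are hypothetical, and the sketch assumes that each stricter mode also resets on the events that reset the looser modes.

```python
import random


class VariantMemory:
    """Toy model of how a persistence mode affects which variant is shown."""

    # Browser events that wipe the remembered variant, per mode (assumed mapping).
    RESETS = {
        "never_forget": {"clear_cookies"},
        "forget_after_tab_close": {"clear_cookies", "tab_close"},
        "forget_after_reload": {"clear_cookies", "tab_close", "reload"},
    }

    def __init__(self, mode, weights, seed=None):
        self.mode = mode
        self.weights = weights
        self.rng = random.Random(seed)
        self.remembered = None  # variant this chatter was last assigned

    def browser_event(self, event):
        """Forget the variant if this event resets persistence in this mode."""
        if event in self.RESETS[self.mode]:
            self.remembered = None

    def trigger_test_answer(self):
        """Return the remembered variant, or assign a new one at random."""
        if self.remembered is None:
            names = list(self.weights)
            self.remembered = self.rng.choices(
                names, weights=[self.weights[n] for n in names], k=1
            )[0]
        return self.remembered


chatter = VariantMemory("forget_after_reload", {"Control": 50, "Variant B": 50}, seed=7)
first = chatter.trigger_test_answer()
chatter.browser_event("reload")          # persistence resets in this mode
second = chatter.trigger_test_answer()   # freshly assigned, may or may not differ
print(first, second)
```

Note that a reset does not guarantee a different variant. It only means the chatter is assigned again at random, according to the variant weighting.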

Understand test performance

The A/B Testing Overview report gives you an overview of your A/B test’s performance, including:

- The number of conversations each Answer variant appeared in.

- The number of times a chatter reaches your objective following exposure to a variant.

- The conversion rate of each variant, that is, the percentage of times a chatter reaches your objective following exposure to that variant.

- Whether your test results are Conclusive or Inconclusive. A test is marked Conclusive when one variant emerges as a statistically significant winner.
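This article does not say which statistical test Ada uses to mark a test Conclusive. Purely as an illustration of the idea, the sketch below computes a conversion rate and applies a two-proportion z-test, one common way to check whether the difference between two variants is statistically significant. The function names and the sample figures are hypothetical.

```python
import math


def conversion_rate(conversions, conversations):
    """Share of conversations in which the chatter reached the objective."""
    return conversions / conversations if conversations else 0.0


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (conv_b / n_b - conv_a / n_a) / se
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical report figures: conversions and conversations per variant.
control = (120, 1000)
variant = (160, 1000)

print("Control rate:", conversion_rate(*control))
print("Variant rate:", conversion_rate(*variant))
p = two_proportion_p_value(*control, *variant)
print("Significant at the 5% level:", p < 0.05)
```

A small p-value suggests the difference in conversion rates is unlikely to be due to chance alone. With more than two variants, a real analysis would also need to account for multiple comparisons.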

There are two ways to open your A/B test report:

- Click View report at the top right of the A/B test Answer.

- Alternatively, on the Ada dashboard, go to Measure > Reports and click the A/B Testing Overview report.

The objective of your A/B test is the Event you chose to indicate test success.

How to declare a test winner

Once an A/B test is marked Conclusive, you can declare a test winner.

- At the top right of your test Answer, click the Declare a winner button.

- In the dialog that appears, click the Choose a winning variant drop-down menu, then select the variant to declare the winner.

The variant you choose as the winner becomes the default content for that Answer. The A/B test’s status then changes to Completed. Your completed A/B test results remain available for you to reference in the A/B Tests view.